Data Mining with MapReduce
[ 2013/4/25 15:48:00 | By: ����� ] |

(������������ϣ�����)

I. Algorithm Overview

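K-means is a hard clustering algorithm and a typical representative of prototype-based objective-function clustering. It takes some distance from the data points to the prototypes as the objective function to optimize, and derives the update rule of the iteration by finding the extremum of that function. K-means uses Euclidean distance as its similarity measure: for a given vector V of initial cluster centers it seeks the best partition, the one that minimizes the evaluation index J. The algorithm uses the sum-of-squared-errors criterion as its clustering criterion.

K-means is a very typical distance-based clustering algorithm. Distance is the measure of similarity: the closer two objects are, the more similar they are taken to be. The algorithm regards a cluster as a group of objects that lie close together, so its final goal is a set of compact, well-separated clusters.

The choice of the k initial cluster centers has a large influence on the result, because the first step of the algorithm picks k arbitrary objects at random as the initial centers, each initially representing one cluster. In every iteration, each remaining object in the data set is reassigned to the nearest cluster according to its distance to the cluster centers. Once all objects have been examined, one iteration is complete and new cluster centers are computed. If the value of J does not change from one iteration to the next, the algorithm has converged.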

II. Basic Idea

1. Mathematical description

Given n real vectors of dimension d, $(x_1, x_2, \dots, x_n)$ (called points from here on, for brevity), the K-Means algorithm partitions them into k clusters (k ≤ n) according to a parameter k chosen in advance. The partition criterion is to minimize the sum of squared distances between each point and the centroid (mean) of its cluster. Writing the clusters as $S = \{S_1, S_2, \dots, S_k\}$, the mathematical description is:

$$\underset{S}{\arg\min} \sum_{i=1}^{k} \sum_{x_j \in S_i} \lVert x_j - \mu_i \rVert^2$$

where $\mu_i$ is the centroid of the i-th cluster (the mean of all points in that cluster).

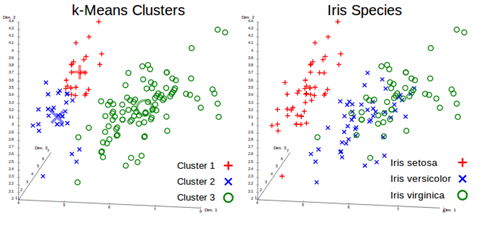

The clustering result looks similar to the figure.

For details see: http://en.wikipedia.org/wiki/K-means_clustering

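2. The K-means algorithm

It is an iterative algorithm:

(1) Build an initial partition from the k given in advance, obtaining k clusters. For example, pick k points at random as the centroids of the k clusters, or use the clusters produced by Canopy Clustering as the initial centroids (in which case the value of k is determined by the Canopy Clustering result).

(2) Compute the distance from each point to every cluster centroid and add the point to the nearest cluster.

(3) Recompute the centroid of every cluster.

(4) Repeat steps 2 and 3 until no cluster centroid moves by more than a given tolerance, or until the maximum number of iterations is reached.

The algorithm is simple, yet many more complex algorithms do not necessarily perform better in practice. It also has good locality and is easy to parallelize, which matters for large data sets. Its time complexity is O(nkt), where n is the number of points, k the number of clusters and t the number of iterations.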
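The four steps above can be sketched in plain Java. This is a minimal single-machine illustration, not Mahout code: the class name `KMeansSketch`, its method names, and the choice to keep the old center for an empty cluster are all assumptions made for the example.

```java
/** Minimal in-memory K-means (Lloyd's iteration); an illustrative sketch only. */
final class KMeansSketch {

    /** Squared Euclidean distance between two points of equal dimension. */
    static double squaredDistance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return sum;
    }

    /** Step 2: index of the center closest to the point. */
    static int nearest(double[] point, double[][] centers) {
        int best = 0;
        for (int c = 1; c < centers.length; c++) {
            if (squaredDistance(point, centers[c]) < squaredDistance(point, centers[best])) {
                best = c;
            }
        }
        return best;
    }

    /** Runs steps 2 to 4 from the given initial centers and returns the final centers. */
    static double[][] cluster(double[][] points, double[][] initialCenters,
                              int maxIterations, double tolerance) {
        int k = initialCenters.length;
        int d = points[0].length;
        double[][] centers = new double[k][];
        for (int c = 0; c < k; c++) {
            centers[c] = initialCenters[c].clone();
        }
        for (int iter = 0; iter < maxIterations; iter++) {
            double[][] sums = new double[k][d];
            int[] counts = new int[k];
            // Step 2: assign every point to its nearest center.
            for (double[] p : points) {
                int c = nearest(p, centers);
                counts[c]++;
                for (int j = 0; j < d; j++) {
                    sums[c][j] += p[j];
                }
            }
            // Step 3: recompute each centroid as the mean of its points.
            double maxShift = 0.0;
            for (int c = 0; c < k; c++) {
                if (counts[c] == 0) {
                    continue; // assumption: an empty cluster keeps its old center
                }
                double[] updated = new double[d];
                for (int j = 0; j < d; j++) {
                    updated[j] = sums[c][j] / counts[c];
                }
                maxShift = Math.max(maxShift, Math.sqrt(squaredDistance(updated, centers[c])));
                centers[c] = updated;
            }
            // Step 4: stop once no centroid moved by more than the tolerance.
            if (maxShift <= tolerance) {
                break;
            }
        }
        return centers;
    }
}
```

Each iteration touches every point once and compares it with k centers, which is where the O(nkt) cost comes from.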

III. Parallelizing K-means

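The good locality of K-Means makes it easy to parallelize. In the first phase, cluster generation is parallelized: each slave reads the data set stored locally and produces a set of clusters with the algorithm above; these local sets are then merged into the global cluster set of the first iteration, and the process is repeated until the stopping condition is met. In the second phase, the clusters obtained this way are used to perform the actual clustering.

In map-reduce terms: during the map phase each datanode reads its local data and outputs every point together with the cluster it belongs to; the combiner performs a local reduce over the points that fall into the same cluster and outputs the result; the reduce operation produces the global cluster set and writes it to HDFS.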
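The map, combine and reduce roles described above can be imitated in memory to show which data flows between them. The sketch below is an assumption-laden illustration, not Hadoop or Mahout code: `KMeansMapReduceSketch`, `Partial`, `mapAndCombine` and `reduce` are hypothetical names, and each "split" is simply an array of points held by one worker.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** In-memory imitation of one K-means iteration as map -> combine -> reduce. */
final class KMeansMapReduceSketch {

    /** Partial statistics for one cluster: point count and per-dimension sum. */
    static final class Partial {
        long count;
        final double[] sum;

        Partial(int dim) {
            sum = new double[dim];
        }

        void add(double[] point) {
            count++;
            for (int j = 0; j < sum.length; j++) {
                sum[j] += point[j];
            }
        }

        void merge(Partial other) {
            count += other.count;
            for (int j = 0; j < sum.length; j++) {
                sum[j] += other.sum[j];
            }
        }
    }

    /** Index of the center nearest to the point (squared Euclidean distance). */
    static int nearest(double[] point, double[][] centers) {
        int best = 0;
        double bestDist = Double.MAX_VALUE;
        for (int c = 0; c < centers.length; c++) {
            double dist = 0.0;
            for (int j = 0; j < point.length; j++) {
                double diff = point[j] - centers[c][j];
                dist += diff * diff;
            }
            if (dist < bestDist) {
                bestDist = dist;
                best = c;
            }
        }
        return best;
    }

    /** Map + combine on one local split: assign each point, pre-aggregate per cluster id. */
    static Map<Integer, Partial> mapAndCombine(double[][] split, double[][] centers) {
        Map<Integer, Partial> local = new HashMap<>();
        for (double[] p : split) {
            int id = nearest(p, centers);
            local.computeIfAbsent(id, key -> new Partial(p.length)).add(p);
        }
        return local;
    }

    /** Reduce: merge the partials of all splits and emit the new global centers. */
    static double[][] reduce(List<Map<Integer, Partial>> partials, double[][] oldCenters) {
        Map<Integer, Partial> global = new HashMap<>();
        for (Map<Integer, Partial> local : partials) {
            for (Map.Entry<Integer, Partial> e : local.entrySet()) {
                global.computeIfAbsent(e.getKey(), key -> new Partial(e.getValue().sum.length))
                      .merge(e.getValue());
            }
        }
        double[][] centers = new double[oldCenters.length][];
        for (int c = 0; c < oldCenters.length; c++) {
            Partial p = global.get(c);
            if (p == null) {
                centers[c] = oldCenters[c].clone(); // no points assigned: keep old center
                continue;
            }
            centers[c] = new double[p.sum.length];
            for (int j = 0; j < p.sum.length; j++) {
                centers[c][j] = p.sum[j] / p.count;
            }
        }
        return centers;
    }
}
```

Only one small `Partial` per cluster leaves each split, rather than every point, which is the reason a combiner reduces the data shuffled to the reducer.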

IV. K-means in Mahout

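Mahout implements standard K-Means Clustering along the same lines as described above. It uses 2 map operations, 1 combine operation and 1 reduce operation in total: every iteration runs 1 map, 1 combine and 1 reduce to obtain and store the global cluster set, and once the iterations finish, one more map performs the clustering itself.

1. Data structure model

Mahout's clustering algorithms represent objects as Vectors and support both dense and sparse vectors. There are three representations, all sharing the base class AbstractVector, which implements many of the Vector operations: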

(1) DenseVector

It is implemented with a double array (private double[] values) and is suited to dense data.

(2) RandomAccessSparseVector

It represents a sparse vector with random access. Only non-zero elements are stored, in a hash map: OpenIntDoubleHashMap.

In OpenIntDoubleHashMap the key is an int and the value a double; collisions are resolved by double hashing.

(3) SequentialAccessSparseVector

It represents a sparse vector with sequential access. Again only non-zero elements are stored, this time in an ordered mapping: OrderedIntDoubleMapping.

In OrderedIntDoubleMapping the key is an int and the value a double. The storage layout is reminiscent of the Libsvm data format (index of non-zero element:value of non-zero element): one int array stores the indices and one double array stores the non-zero values. Reading or writing an element requires finding its offset in indices, and because indices is kept sorted, the lookup uses binary search.
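The parallel-array layout with binary search can be illustrated with a small stand-alone class. This is a sketch of the idea only, under the assumption of a simple growable array; `SortedSparseVectorSketch` and its methods are hypothetical names and do not reproduce Mahout's OrderedIntDoubleMapping.

```java
import java.util.Arrays;

/** Sparse vector stored as a sorted index array plus a parallel value array. */
final class SortedSparseVectorSketch {
    private int[] indices = new int[4];
    private double[] values = new double[4];
    private int size = 0;

    /** Returns the stored value at the index, or 0.0 when no entry exists. */
    double get(int index) {
        int pos = Arrays.binarySearch(indices, 0, size, index);
        return pos >= 0 ? values[pos] : 0.0;
    }

    /** Stores a value while keeping the index array sorted. */
    void set(int index, double value) {
        int pos = Arrays.binarySearch(indices, 0, size, index);
        if (pos >= 0) {
            values[pos] = value; // index already present: overwrite
            return;
        }
        int insertAt = -(pos + 1); // binarySearch encodes the insertion point
        if (size == indices.length) {
            indices = Arrays.copyOf(indices, size * 2);
            values = Arrays.copyOf(values, size * 2);
        }
        // Shift the tail one slot to the right to make room.
        System.arraycopy(indices, insertAt, indices, insertAt + 1, size - insertAt);
        System.arraycopy(values, insertAt, values, insertAt + 1, size - insertAt);
        indices[insertAt] = index;
        values[insertAt] = value;
        size++;
    }

    /** Number of entries actually stored. */
    int storedEntries() {
        return size;
    }
}
```

Lookups cost O(log m) for m stored entries and insertions may shift the tail of both arrays, which is why this layout favours in-order iteration over random writes.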

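2. Meaning of the K-means variables

These can be read from Cluster.java and its parent class. Mahout implements an abstract class, AbstractCluster, that encapsulates a cluster; the previous article describes it in detail, so only a brief summary is given here:

(1) private int id; # id of each cluster produced by the K-Means algorithm

(2) private long numPoints; # number of points in the cluster; every point here is a Vector

(3) private Vector center; # centroid of the cluster, here the mean, computed from s0 and s1

(4) private Vector Radius; # radius of the cluster: the standard deviation of its points, reflecting how dispersed the members are; computed from s0, s1 and s2

(5) private double s0; # sum of the weights of the points in the cluster

(6) private Vector s1; # weighted sum of the points in the cluster

(7) private Vector s2; # weighted sum of the squares of the points in the cluster

(8) public void computeParameters(); # computes numPoints, center and Radius from s0, s1 and s2:

$$\text{numPoints} = s_0, \qquad \text{center} = \frac{s_1}{s_0}, \qquad \text{radius} = \frac{\sqrt{s_2\, s_0 - s_1 \odot s_1}}{s_0}$$

followed by resetting $s_0$, $s_1$ and $s_2$ (the square, product and square root are taken element by element).

These operations are important, and the last three steps (the resets) are necessary, as explained later.

(9) public void observe(VectorWritable x, double weight); # whenever a new point joins the current cluster, s0, s1 and s2 must be updated

(10) public ClusterObservation getObservations(); # used frequently during the combine operation; it returns a ClusterObservation initialized from s0, s1 and s2 that represents all points observed so far in the current cluster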
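How s0, s1 and s2 accumulate and then turn into a center and a radius can be shown with a small stand-alone class. This is a sketch under assumptions, not Mahout's AbstractCluster: `ClusterStatsSketch` is a hypothetical name, plain `double[]` arrays replace Mahout's Vector, and the radius is taken as the per-dimension standard deviation sqrt(s2·s0 − s1²)/s0.

```java
import java.util.Arrays;

/** Running statistics (s0, s1, s2) of a cluster and the parameters derived from them. */
final class ClusterStatsSketch {
    private double s0;          // sum of weights
    private final double[] s1;  // weighted sum of points
    private final double[] s2;  // weighted sum of squared points
    private final double[] center;
    private final double[] radius;
    private long numPoints;

    ClusterStatsSketch(int dim) {
        s1 = new double[dim];
        s2 = new double[dim];
        center = new double[dim];
        radius = new double[dim];
    }

    /** Adds one weighted point to the running sums. */
    void observe(double[] x, double weight) {
        s0 += weight;
        for (int j = 0; j < x.length; j++) {
            s1[j] += weight * x[j];
            s2[j] += weight * x[j] * x[j];
        }
    }

    /** Derives numPoints, center and radius, then clears the sums for the next iteration. */
    void computeParameters() {
        if (s0 == 0) {
            return; // nothing observed since the last call
        }
        numPoints = (long) s0;
        for (int j = 0; j < s1.length; j++) {
            center[j] = s1[j] / s0;
            // Guard against tiny negative values caused by rounding.
            radius[j] = Math.sqrt(Math.max(0.0, s2[j] * s0 - s1[j] * s1[j])) / s0;
        }
        s0 = 0;
        Arrays.fill(s1, 0.0);
        Arrays.fill(s2, 0.0);
    }

    double[] getCenter() { return center.clone(); }
    double[] getRadius() { return radius.clone(); }
    long getNumPoints() { return numPoints; }
}
```

Because the three sums are additive, partial results from different machines can simply be added together before `computeParameters` is called, which is what makes the statistics suitable for a combiner.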

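3. Map-Reduce implementation of K-means

K-Means Clustering comes in a single-machine version and an MR version. The single-machine version is not covered here. The MR version uses two map operations, one combine operation and one reduce operation, triggered through two different jobs and organized by the Driver. The map and reduce steps run in the following order:

(1) Forming the initial K clusters

K-Means needs an initial partition of the data points. Mahout offers two ways to obtain it (illustrated with the first 3 features of the Iris dataset):

A. Using the RandomSeedGenerator class

It generates k initial clusters in the specified clusters directory and stores them as a Sequence File. Its selection method tries, as far as possible, to avoid choosing isolated points as cluster centroids. The rough flow is:

Figure 2

B. Using Canopy Clustering

Canopy Clustering is often used to produce a rough partition of the initial data, and its result can help a more expensive clustering that follows. It is more useful as a preprocessing step than as a clustering method in its own right: for K-Means, for example, it supplies the value of k, and it also handles isolated points well. The parameters that must be chosen by hand change from k to T1 and T2, and both are indispensable. T1 determines how many points each cluster contains, which directly affects the cluster's centroid and radius; T2 determines the number of clusters: if T2 is too large there will be only one cluster, and if it is too small there will be too many. Experiments show that the values of T1 and T2 strongly affect the quality of the result. How to choose them is an open question; AIC, BIC or cross-validation appear to be possible approaches.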

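(2). Configuring the cluster information

In the MR implementation of K-Means, the first iteration takes the output directory of the random method or of Canopy Clustering as its input directory, and every later iteration takes the output directory of the previous iteration as its input directory. The cluster information therefore has to be read from that directory before every kmeans map and kmeans reduce operation. KMeansUtil.configureWithClusterInfo provides this: given an HDFS directory, it loads the Canopy clusters or the clusters of the previous iteration into a Collection. Every subsequent map and reduce operation needs this method.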

(3).KMeansMapper

public class KMeansMapper extends Mapper<WritableComparable<?>, VectorWritable, Text, ClusterObservations> {

private KMeansClusterer clusterer;

private final Collection<Cluster> clusters = new ArrayList<Cluster>();

@Override

protected void map(WritableComparable<?> key, VectorWritable point, Context context)

throws IOException, InterruptedException {

this.clusterer.emitPointToNearestCluster(point.get(), this.clusters, context);

}

@Override

protected void setup(Context context) throws IOException, InterruptedException {

super.setup(context);

Configuration conf = context.getConfiguration();

try {

ClassLoader ccl = Thread.currentThread().getContextClassLoader();

DistanceMeasure measure = ccl.loadClass(conf.get(KMeansConfigKeys.DISTANCE_MEASURE_KEY))

.asSubclass(DistanceMeasure.class).newInstance();

measure.configure(conf);

this.clusterer = new KMeansClusterer(measure);

String clusterPath = conf.get(KMeansConfigKeys.CLUSTER_PATH_KEY);

if (clusterPath != null && clusterPath.length() > 0) {

KMeansUtil.configureWithClusterInfo(conf, new Path(clusterPath), clusters);

if (clusters.isEmpty()) {

throw new IllegalStateException("No clusters found. Check your -c path.");

}

}

} catch (ClassNotFoundException e) {

throw new IllegalStateException(e);

} catch (IllegalAccessException e) {

throw new IllegalStateException(e);

} catch (InstantiationException e) {

throw new IllegalStateException(e);

}

}

void setup(Collection<Cluster> clusters, DistanceMeasure measure) {

this.clusters.clear();

this.clusters.addAll(clusters);

this.clusterer = new KMeansClusterer(measure);

}

}

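A. KMeansMapper receives (WritableComparable<?>, VectorWritable) pairs. Its setup method uses KMeansUtil.configureWithClusterInfo to load the clustering result of the previous iteration, on which the map operation bases its assignment.

B. Each slave machine processes the data stored on its own disk in a distributed fashion. Using the cluster information obtained earlier, emitPointToNearestCluster adds each point to the cluster nearest to it and outputs a (ID of the nearest cluster, ClusterObservations wrapping the current point) pair. Note that the Mapper only assigns the point to the nearest cluster and records that assignment as a (key, value) pair for the combiner and reducer to collect; it does not update the cluster centroid or any other cluster parameter.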

(4).KMeansCombiner

public class KMeansCombiner extends Reducer<Text, ClusterObservations, Text, ClusterObservations> {

@Override

protected void reduce(Text key, Iterable<ClusterObservations> values, Context context)

throws IOException, InterruptedException {

Cluster cluster = new Cluster();

for (ClusterObservations value : values) {

cluster.observe(value);

}

context.write(key, cluster.getObservations());

}

}

The combiner is a local reduce operation that runs after map and before reduce:

(5).KMeansReducer

public class KMeansReducer extends Reducer<Text, ClusterObservations, Text, Cluster> {

private Map<String, Cluster> clusterMap;

private double convergenceDelta;

private KMeansClusterer clusterer;

@Override

protected void reduce(Text key, Iterable<ClusterObservations> values, Context context)

throws IOException, InterruptedException {

Cluster cluster = clusterMap.get(key.toString());

for (ClusterObservations delta : values) {

cluster.observe(delta);

}

// force convergence calculation

boolean converged = clusterer.computeConvergence(cluster, convergenceDelta);

if (converged) {

context.getCounter("Clustering", "Converged Clusters").increment(1);

}

cluster.computeParameters();

context.write(new Text(cluster.getIdentifier()), cluster);

}

@Override

protected void setup(Context context) throws IOException, InterruptedException {

super.setup(context);

Configuration conf = context.getConfiguration();

try {

ClassLoader ccl = Thread.currentThread().getContextClassLoader();

DistanceMeasure measure = ccl.loadClass(conf.get(KMeansConfigKeys.DISTANCE_MEASURE_KEY))

.asSubclass(DistanceMeasure.class).newInstance();

measure.configure(conf);

this.convergenceDelta = Double.parseDouble(conf.get(KMeansConfigKeys.CLUSTER_CONVERGENCE_KEY));

this.clusterer = new KMeansClusterer(measure);

this.clusterMap = new HashMap<String, Cluster>();

String path = conf.get(KMeansConfigKeys.CLUSTER_PATH_KEY);

if (path.length() > 0) {

Collection<Cluster> clusters = new ArrayList<Cluster>();

KMeansUtil.configureWithClusterInfo(conf, new Path(path), clusters);

setClusterMap(clusters);

if (clusterMap.isEmpty()) {

throw new IllegalStateException("Cluster is empty!");

}

}

} catch (ClassNotFoundException e) {

throw new IllegalStateException(e);

} catch (IllegalAccessException e) {

throw new IllegalStateException(e);

} catch (InstantiationException e) {

throw new IllegalStateException(e);

}

}

private void setClusterMap(Collection<Cluster> clusters) {

clusterMap = new HashMap<String, Cluster>();

for (Cluster cluster : clusters) {

clusterMap.put(cluster.getIdentifier(), cluster);

}

clusters.clear();

}

public void setup(Collection<Cluster> clusters, DistanceMeasure measure) {

setClusterMap(clusters);

this.clusterer = new KMeansClusterer(measure);

}

}

The operations are straightforward; only setup is slightly more involved.

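A. The purpose of setup is to read the initial partition or the result of the previous iteration and build the cluster information. It also builds a Map<cluster ID, Cluster> so that a cluster can be looked up by its ID.

B. The reduce operation is very direct: it aggregates the <Cluster ID, ClusterObservations> pairs passed on by the combiner.

computeConvergence decides whether the current cluster has converged, that is, whether the distance between the new centroid and the old centroid meets the precision passed in earlier.

Note the call to cluster.computeParameters(). This operation is very important: it guarantees that the result of this iteration does not leak into the next one, which is what makes the step "recompute the centroid of every cluster" possible. It consists of six steps:

$$\text{numPoints} = s_0, \qquad \text{center} = \frac{s_1}{s_0}, \qquad \text{radius} = \frac{\sqrt{s_2\, s_0 - s_1 \odot s_1}}{s_0}$$

followed by resetting $s_0$, $s_1$ and $s_2$.

The first three steps produce the new cluster information.

The last three steps clear S0, S1 and S2, so that the cluster information used by the next iteration is "clean".

Afterwards, reduce writes the (Cluster ID, Cluster) pairs to HDFS in a folder named "clusters-<iteration number>", for use by later iterations.

The Reduce operation collects the information emitted by the Combiner and once more updates the Canopy centroids and related information.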
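The convergence test described above boils down to comparing how far a centroid moved against a threshold. The sketch below illustrates that check in plain Java; it assumes Euclidean distance and uses the hypothetical names `ConvergenceSketch`, `hasConverged` and `allConverged`, so it should not be read as the body of Mahout's computeConvergence.

```java
/** Convergence check: a cluster has converged when its centroid moved at most delta. */
final class ConvergenceSketch {

    /** Euclidean distance between two points of equal dimension. */
    static double euclidean(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }

    /** True when the new centroid lies within convergenceDelta of the old center. */
    static boolean hasConverged(double[] oldCenter, double[] newCentroid, double convergenceDelta) {
        return euclidean(oldCenter, newCentroid) <= convergenceDelta;
    }

    /** The iteration as a whole can stop only when every cluster has converged. */
    static boolean allConverged(double[][] oldCenters, double[][] newCentroids, double convergenceDelta) {
        for (int c = 0; c < oldCenters.length; c++) {
            if (!hasConverged(oldCenters[c], newCentroids[c], convergenceDelta)) {
                return false;
            }
        }
        return true;
    }
}
```

In the reducer shown earlier, each converged cluster increments the "Converged Clusters" counter, so a driver can compare that count with the number of clusters to decide whether to run another iteration.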

(6).KMeansClusterMapper

The MR operations so far build the cluster information; KMeansClusterMapper then uses the finished cluster information to do the clustering.

public class KMeansClusterMapper

extends Mapper<WritableComparable<?>,VectorWritable,IntWritable,WeightedVectorWritable> {

private final Collection<Cluster> clusters = new ArrayList<Cluster>();

private KMeansClusterer clusterer;

@Override

protected void map(WritableComparable<?> key, VectorWritable point, Context context)

throws IOException, InterruptedException {

clusterer.outputPointWithClusterInfo(point.get(), clusters, context);

}

@Override

protected void setup(Context context) throws IOException, InterruptedException {

super.setup(context);

Configuration conf = context.getConfiguration();

try {

ClassLoader ccl = Thread.currentThread().getContextClassLoader();

DistanceMeasure measure = ccl.loadClass(conf.get(KMeansConfigKeys.DISTANCE_MEASURE_KEY))

.asSubclass(DistanceMeasure.class).newInstance();

measure.configure(conf);

String clusterPath = conf.get(KMeansConfigKeys.CLUSTER_PATH_KEY);

if (clusterPath != null && clusterPath.length() > 0) {

KMeansUtil.configureWithClusterInfo(conf, new Path(clusterPath), clusters);

if (clusters.isEmpty()) {

throw new IllegalStateException("No clusters found. Check your -c path.");

}

}

this.clusterer = new KMeansClusterer(measure);

} catch (ClassNotFoundException e) {

throw new IllegalStateException(e);

} catch (IllegalAccessException e) {

throw new IllegalStateException(e);

} catch (InstantiationException e) {

throw new IllegalStateException(e);

}

}

}

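A. setup again reads the cluster information from the specified directory and builds the clusters.

B. The map operation performs the clustering by computing the distance from each point to the centroid of every cluster. When map finishes, every point has been placed in exactly one cluster, the one nearest to it, so no reduce operation is needed afterwards.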

(7).KMeansDriver

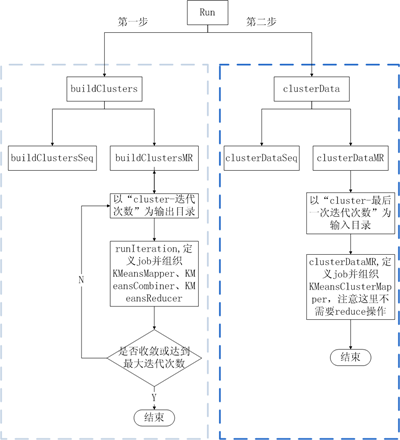

Worth noting here is the iteration in buildClustersMR; runIteration sets the parameters of the job that runs KMeansMapper, KMeansCombiner and KMeansReducer.

The buildClustersMR code:

private static Path buildClustersMR(Configuration conf,

Path input,

Path clustersIn,

Path output,

DistanceMeasure measure,

int maxIterations,

String delta) throws IOException, InterruptedException, ClassNotFoundException {

boolean converged = false;

int iteration = 1;

while (!converged && iteration <= maxIterations) {

log.info("K-Means Iteration {}", iteration);

// point the output to a new directory per iteration

Path clustersOut = new Path(output, AbstractCluster.CLUSTERS_DIR + iteration);

converged = runIteration(conf, input, clustersIn, clustersOut, measure.getClass().getName(), delta);

// now point the input to the old output directory

clustersIn = clustersOut;

iteration++;

}

return clustersIn;

}

If KMeansMapper, KMeansCombiner, KMeansReducer and KMeansClusterMapper are the bricks, KMeansDriver is the builder: it organizes the whole kmeans workflow (both the single-machine and the MR version). A schematic is shown in the following figure:

Figure 4

http://www.cnblogs.com/biyeymyhjob/archive/2012/07/20/2599544.html

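======== Cluster analysis with Mahout

What is cluster analysis?

Clustering groups data objects into classes, or clusters. The goal is high similarity among objects in the same cluster and large differences between objects in different clusters. In many applications the objects of one cluster can therefore be treated as a whole, which reduces the amount of computation or improves its quality.

Clustering is in fact an everyday human activity: the saying "birds of a feather flock together" expresses exactly this idea. People constantly refine their subconscious clustering patterns to learn how to tell things and people apart. Cluster analysis is also widely used in practice, including pattern recognition, data analysis, image processing and market research. Through clustering, people can recognize dense and sparse regions, discover global distribution patterns, and find interesting relationships between data attributes.

Clustering also plays an increasingly important role in Web applications. Its most widespread use is to classify documents on the Web and organize the publication of information, giving users an effectively categorized way to browse content (portal sites). A time dimension can be added to track how the information in each category develops, which topics have recently attracted attention, or what kind of content people were interested in over a given period. All of these applications are built on clustering. As a data mining function, cluster analysis can serve as a stand-alone tool to learn how the data is distributed, observe the characteristics of each cluster, and focus further analysis on particular clusters. It can also serve as a preprocessing step for other algorithms, reducing computation and improving analysis efficiency, which is the reason it is introduced here.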

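Different clustering problems

To pick the most suitable and efficient algorithm for a clustering problem, the problem itself must first be analyzed. The following looks at the requirements of a clustering problem from several angles.

Is the clustering result exclusive or overlapping?

An example makes this clear. Suppose your clustering problem should yield two clusters: "users who like James Cameron's films" and "users who do not like James Cameron". This is an exclusive clustering problem: a user belongs either to the "likes" cluster or to the "does not like" cluster. If instead the clusters are "users who like James Cameron's films" and "users who like Leonardo's films", the problem is overlapping, because one user can like both James Cameron and Leonardo.

The core question is whether an element may belong to more than one cluster in the result. If it may, the clustering problem is overlapping; if not, it is exclusive.

Hierarchical or partition-based?

Most clustering problems that come to mind are partitioning problems: take a set of objects and divide them into groups according to some principle. Besides partition-based clustering there is another type that is also common in daily life, hierarchical clustering. Its result ranks the objects in levels: the top level groups the objects roughly, and each group is then subdivided further, possibly until every path ends in a single instance. This is the top-down approach to hierarchical clustering; correspondingly there is a bottom-up approach. Put simply, top-down refines the groups step by step, while bottom-up merges groups step by step.

Fixed or unlimited number of clusters?

This property is easy to understand: either the number of clusters is fixed before the algorithm runs, or the algorithm chooses a suitable number of clusters based on the characteristics of the data.

Distance-based or based on a probability distribution model?

The second article of this series, on collaborative filtering, introduced the notions of similarity and distance in detail. Distance-based clustering is easy to understand: objects that are close, and therefore similar, are grouped together. Clustering based on a probability distribution model may be less intuitive, so here is a simple example.

A probability distribution model can be understood as the distribution of a set of points in N-dimensional space, where the distribution typically follows certain characteristics, for instance forming a particular shape. Clustering based on such a model means finding, within a set of objects, the subsets of points that fit a given distribution model. These points are not necessarily the closest or the most similar ones; rather, together they match the shape that the model describes.

Figure 1 gives an example: applying different clustering strategies to the same set of points yields completely different results. The result on the left is distance-based, and its core principle is to group nearby points together. The result on the right is based on a probability distribution model, here an ellipse at a certain angle. Two points are marked in red in the figure. They are very close together, so distance-based clustering puts them in the same cluster, whereas model-based clustering puts them in different clusters purely to satisfy the chosen distribution model (this is, of course, a deliberately extreme example). The example shows that in model-based clustering the definition of the model is central, and different models can lead to completely different results.

Figure 1. Distance-based clustering and clustering based on a probability distribution model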


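The cluster analysis framework in Apache Mahout

Apache Mahout is an open-source project of the Apache Software Foundation (ASF). It provides scalable implementations of classic machine learning algorithms and aims to help developers build intelligent applications more quickly and easily. Recent versions of Mahout also add support for Apache Hadoop, so that these algorithms run more efficiently in cloud computing environments.

For installing and configuring Apache Mahout, see "Building a social recommendation engine with Apache Mahout", a developerWorks article the author published in 2009 on implementing a recommendation engine with Mahout; it describes the installation steps in detail.

Mahout provides several commonly used clustering algorithms, covering concrete implementations of the algorithm types just discussed. The following sections look more closely at the principles, strengths, weaknesses and typical use cases of a few representative clustering algorithms, and at how to implement them efficiently with Mahout.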

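Clustering algorithms in depth

Before turning to the algorithms, here is a brief introduction to the data model Mahout uses to describe clustering problems.

Data model

Mahout's clustering algorithms represent objects with a simple data model: the vector (Vector). With data described as vectors, the similarity of two objects is easy to compute. Vectors and vector similarity were covered in detail in the previous article of this series, on collaborative filtering; see "Exploring the secrets inside recommendation engines, Part 2: Algorithms behind recommendation engines -- collaborative filtering".

A Vector in Mahout is a composite object in which every field is a floating-point number (double). The most obvious implementation is an array of doubles. In practice, however, vectors differ in content: some are dense, with a value in every field, while others are sparse, with values in only a few fields. Mahout therefore provides several implementations: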

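- DenseVector is implemented as an array of doubles. It stores every field of the vector and is suited to dense vectors.

- RandomAccessSparseVector is implemented on top of a HashMap whose key is an integer (int) and whose value is a floating-point number (double). It stores only the non-empty values of the vector and provides random access.

- SequentialAccessSparseVector is implemented as parallel arrays of integers (int) and floating-point numbers (double). It also stores only the non-empty values of the vector, but provides only sequential access.

Choose the vector implementation that matches the needs of your algorithm. If the algorithm needs a lot of random access, choose DenseVector or RandomAccessSparseVector; if most access is sequential, SequentialAccessSparseVector should perform better. (For the K-Means algorithm, SequentialAccessSparseVector performs better.)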

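With the vector implementations introduced, the next question is how to model existing data as vectors, or in technical terms how to "vectorize" the data, so that Mahout's efficient clustering algorithms can be applied.

- Simple integer or floating-point data

This is the simplest kind of data: just store the different fields in a vector. A point in n-dimensional space, for example, can itself be described as a vector.

- Enumerated data

Such data describes an object, but the range of values is limited. Suppose you have a data set about apples in which each apple is described by size, weight, colour and so on. Take colour as an example and let the possible colours be red, yellow and green. When modelling the data, colours can be encoded as numbers: red = 1, yellow = 2, green = 3. An apple with a diameter of 8 cm, a weight of 0.15 kg and a red colour is then modelled as the vector <8, 0.15, 1>.

Listing 1 below shows how to vectorize these two kinds of data.

Listing 1. Creating simple vectors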

// Create a simple set of two-dimensional points as vectors
public static final double[][] points = { { 1, 1 }, { 2, 1 }, { 1, 2 },
    { 2, 2 }, { 3, 3 }, { 8, 8 }, { 9, 8 }, { 8, 9 }, { 9, 9 }, { 5, 5 },
    { 5, 6 }, { 6, 6 }};

public static List<Vector> getPointVectors(double[][] raw) {
    List<Vector> points = new ArrayList<Vector>();
    for (int i = 0; i < raw.length; i++) {
        double[] fr = raw[i];
        // RandomAccessSparseVector is chosen here
        Vector vec = new RandomAccessSparseVector(fr.length);
        // Copy the data into the newly created Vector
        vec.assign(fr);
        points.add(vec);
    }
    return points;
}

// Create vectors for the apple data
public static List<Vector> generateAppleData() {
    List<Vector> apples = new ArrayList<Vector>();
    // A NamedVector wraps one of the Vector implementations above
    // and gives each Vector a readable name
    NamedVector apple = new NamedVector(new DenseVector(
        new double[] {0.11, 510, 1}),
        "Small round green apple");
    apples.add(apple);
    apple = new NamedVector(new DenseVector(new double[] {0.2, 650, 3}),
        "Large oval red apple");
    apples.add(apple);
    apple = new NamedVector(new DenseVector(new double[] {0.09, 630, 1}),
        "Small elongated red apple");
    apples.add(apple);
    apple = new NamedVector(new DenseVector(new double[] {0.25, 590, 3}),
        "Large round yellow apple");
    apples.add(apple);
    apple = new NamedVector(new DenseVector(new double[] {0.18, 520, 2}),
        "Medium oval green apple");
    apples.add(apple);
    return apples;
}


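- Text information

Text classification is a major application of clustering, so modelling text is a common problem. Information retrieval research already offers a good modelling approach, the Vector Space Model (VSM), which is the most widely used model in that field. Since the vector space model is not the focus of this article, only a brief introduction follows; interested readers can consult the documents listed in the references.

The vector space model represents a text as a vector in which every field is the weight of a word occurring in the text. There are several ways to compute the weight:

- The simplest is a direct count: the number of times the word occurs in the text. This is simple but does not describe the content of the text precisely enough.

- Term Frequency (TF): the frequency with which the word occurs in the text is used as its weight. This merely normalizes the direct count so that texts of different lengths share the same value range, which makes text similarity easier to compare. Neither direct counting nor term frequency, however, solves the problem that frequent but meaningless words receive large weights. In English text, for example, words such as "a" and "the" occur very often but carry no real meaning, and they are not filtered out, so a text model built this way gives inaccurate similarity results.

- Term Frequency - Inverse Document Frequency (TF-IDF): an enhancement of TF. The importance of a word grows in proportion to the number of times it occurs in the document, but drops in inverse proportion to how often it occurs across all documents. Frequent but meaningless words occur in most documents, so their weights are heavily discounted, which makes the text model describe the characteristics of a text more precisely. In information retrieval, TF-IDF is the most commonly used method for modelling text.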
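The three weighting schemes above can be compared with a short computation. The sketch below uses one common textbook definition, tf = count / document length and idf = ln(N / df); this is an assumption for illustration, since libraries such as Lucene and Mahout apply their own smoothed variants. The class `TfIdfSketch` and its methods are hypothetical names.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;

/** Textbook TF-IDF over tokenized documents; an illustrative sketch only. */
final class TfIdfSketch {

    /** Term frequency: occurrences of each term divided by the document length. */
    static Map<String, Double> termFrequency(List<String> doc) {
        Map<String, Double> tf = new HashMap<>();
        for (String term : doc) {
            tf.merge(term, 1.0, Double::sum);
        }
        for (Map.Entry<String, Double> e : tf.entrySet()) {
            e.setValue(e.getValue() / doc.size());
        }
        return tf;
    }

    /** Inverse document frequency: ln(N / df), where df counts documents containing the term. */
    static Map<String, Double> inverseDocumentFrequency(List<List<String>> corpus) {
        Map<String, Integer> df = new HashMap<>();
        for (List<String> doc : corpus) {
            for (String term : new HashSet<>(doc)) {
                df.merge(term, 1, Integer::sum);
            }
        }
        Map<String, Double> idf = new HashMap<>();
        for (Map.Entry<String, Integer> e : df.entrySet()) {
            idf.put(e.getKey(), Math.log((double) corpus.size() / e.getValue()));
        }
        return idf;
    }

    /** TF-IDF weight of every term in one document. */
    static Map<String, Double> tfIdf(List<String> doc, Map<String, Double> idf) {
        Map<String, Double> weights = new HashMap<>();
        for (Map.Entry<String, Double> e : termFrequency(doc).entrySet()) {
            weights.put(e.getKey(), e.getValue() * idf.getOrDefault(e.getKey(), 0.0));
        }
        return weights;
    }
}
```

With this definition a word that appears in every document gets idf = ln(1) = 0, so its weight vanishes regardless of how often it occurs, which is the discounting effect described for words like "a" and "the".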

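For vectorizing text, Mahout already provides utility classes. They build on Lucene to analyze the text and then create text vectors. Listing 2 below gives an example. The text analyzed is the news data provided by Reuters; the download address is given in the reference resources. After downloading the data set, place it in the "clustering/reuters" directory.

Listing 2. Creating vectors from text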

public static void documentVectorize(String[] args) throws Exception{
    // 1. Extract the Reuters data; Mahout provides a dedicated method for this
    DocumentClustering.extractReuters();
    // 2. Store the data as a SequenceFile. These utility classes are built on Hadoop,
    //    so the data must first be written as a SequenceFile to be read and processed
    DocumentClustering.transformToSequenceFile();
    // 3. Vectorize the data in the SequenceFile using the Lucene-based tools
    DocumentClustering.transformToVector();
}

public static void extractReuters(){
    // ExtractReuters is a Hadoop-based implementation, so it needs the input and output
    // directories. Here they are mapped directly to local folders; the extracted data
    // is written to the output directory
    File inputFolder = new File("clustering/reuters");
    File outputFolder = new File("clustering/reuters-extracted");
    ExtractReuters extractor = new ExtractReuters(inputFolder, outputFolder);
    extractor.extract();
}

public static void transformToSequenceFile(){
    // SequenceFilesFromDirectory writes all files under a directory into SequenceFiles.
    // It is a utility class that can be invoked from the command line;
    // here its main method is called directly
    String[] args = {"-c", "UTF-8", "-i", "clustering/reuters-extracted/", "-o",
        "clustering/reuters-seqfiles"};
    // Meaning of the parameters:
    // -c: character encoding of the files, here "UTF-8"
    // -i: input directory, here the directory the files were just extracted to
    // -o: output directory
    try {
        SequenceFilesFromDirectory.main(args);
    } catch (Exception e) {
        e.printStackTrace();
    }
}

public static void transformToVector(){
    // SparseVectorsFromSequenceFiles vectorizes the data stored in SequenceFiles.
    // It is a utility class that can be invoked from the command line;
    // here its main method is called directly
    String[] args = {"-i", "clustering/reuters-seqfiles/", "-o",
        "clustering/reuters-vectors-bigram", "-a",
        "org.apache.lucene.analysis.WhitespaceAnalyzer"
        , "-chunk", "200", "-wt", "tfidf", "-s", "5",
        "-md", "3", "-x", "90", "-ng", "2", "-ml", "50", "-seq"};
    // Meaning of the parameters:
    // -i: input directory, here the directory of the SequenceFiles just generated
    // -o: output directory
    // -a: the Analyzer to use, here Lucene's whitespace analyzer
    // -chunk: chunk size in MB. For large file collections not all files can be
    //         loaded at once, so the data has to be split into chunks
    // -wt: weighting scheme used during analysis, here tfidf
    // -s: minimum frequency of a term in the whole corpus; terms below it are dropped
    // -md: minimum number of distinct documents a term must occur in;
    //      terms below it are dropped
    // -x: upper limit on the document frequency of frequent or meaningless words
    //     (such as is, a, the); terms above it are dropped
    // -ng: maximum n-gram size considered after tokenization. With 1-grams,
    //      "coca" and "cola" are two terms; with 2-grams, "coca cola" is one term.
    //      In some cases 2-grams are more accurate than 1-grams
    // -ml: similarity threshold for deciding whether adjacent words form one term;
    //      only relevant for n-grams larger than 1. It is the threshold on the
    //      Minimum Log Likelihood Ratio
    // -seq: generate SequentialAccessSparseVectors; when not set,
    //       RandomAccessSparseVectors are generated by default
    try {
        SparseVectorsFromSequenceFiles.main(args);
    } catch (Exception e) {
        e.printStackTrace();
    }
}


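One additional note: the directory structure of the generated vector files looks like this:

Figure 2. Vectorized text information

- df-count directory: document frequency information of the texts

- tf-vectors directory: text vectors weighted by TF

- tfidf-vectors directory: text vectors weighted by TF-IDF

- tokenized-documents directory: the texts after tokenization

- wordcount directory: global occurrence counts of the terms

- dictionary.file-0: the dictionary of terms in these texts

- frequency.file-0: the frequency information corresponding to the dictionary

With vectorization covered, the following sections analyze the individual clustering algorithms, starting with the classic K-means algorithm.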

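K-means clustering

K-means is a typical distance-based, exclusive partitioning method. Given a data set of n objects, it builds k partitions of the data, each partition being one cluster, with k <= n. Two requirements must also hold:

- Every group contains at least one object.

- Every object belongs to exactly one group.

The basic principle of K-means is as follows. Given k, the number of partitions to build:

- First create an initial partition by choosing k objects at random, each of which initially represents a cluster center. Every other object is assigned to the nearest cluster according to its distance to the cluster centers.

- Then apply an iterative relocation technique that tries to improve the partition by moving objects between partitions. Relocation means that whenever an object joins or leaves a cluster, the mean of the cluster is recomputed and the objects are reassigned. This process repeats until no object changes cluster.

K-means works well when the resulting clusters are dense and clearly separated from one another. For large data sets the algorithm is relatively scalable and efficient: its complexity is O(nkt), where n is the number of objects, k the number of clusters and t the number of iterations, and typically k << n and t << n. The algorithm often terminates at a local optimum.

The biggest problem of K-means is that the user must specify k in advance. The choice of k is usually based on experience and repeated experiments, and a value that suits one data set does not carry over to another. In addition, K-means is sensitive to noise and isolated points: a small amount of such data can have a large effect on the mean.

After this much theory, let us implement a simple K-means example with Mahout. As mentioned earlier, Mahout provides a basic in-memory implementation and a Hadoop Map/Reduce implementation, KMeansClusterer and KMeansDriver respectively. The simple example below uses the two-dimensional point set defined in Listing 1.

Listing 3. K-means clustering example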

// In-memory implementation of K-means clustering
public static void kMeansClusterInMemoryKMeans(){
    // Number of clusters to produce, here 2
    int k = 2;
    // Maximum number of iterations of the K-means algorithm
    int maxIter = 3;
    // Maximum distance threshold of the K-means algorithm
    double distanceThreshold = 0.01;
    // Distance measure, here Euclidean distance
    DistanceMeasure measure = new EuclideanDistanceMeasure();
    // Build the vector set from the two-dimensional points of Listing 1
    List<Vector> pointVectors = SimpleDataSet.getPointVectors(SimpleDataSet.points);
    // Randomly choose k of the point vectors as cluster centers
    List<Vector> randomPoints = RandomSeedGenerator.chooseRandomPoints(pointVectors, k);
    // Build clusters around the chosen centers
    List<Cluster> clusters = new ArrayList<Cluster>();
    int clusterId = 0;
    for(Vector v : randomPoints){
        clusters.add(new Cluster(v, clusterId ++, measure));
    }
    // Call KMeansClusterer.clusterPoints to run K-means clustering
    List<List<Cluster>> finalClusters = KMeansClusterer.clusterPoints(pointVectors,
        clusters, measure, maxIter, distanceThreshold);
    // Print the final clustering result
    for(Cluster cluster : finalClusters.get(finalClusters.size() -1)){
        System.out.println("Cluster id: " + cluster.getId() +
            " center: " + cluster.getCenter().asFormatString());
        System.out.println(" Points: " + cluster.getNumPoints());
    }
}

// Hadoop-based implementation of K-means clustering
public static void kMeansClusterUsingMapReduce () throws Exception{
    // Distance measure, here Euclidean distance
    DistanceMeasure measure = new EuclideanDistanceMeasure();
    // Input and output paths. As described earlier, the Hadoop-based implementation
    // identifies its data source through input and output file paths
    Path testpoints = new Path("testpoints");
    Path output = new Path("output");
    // Clear any data under the input and output paths
    HadoopUtil.overwriteOutput(testpoints);
    HadoopUtil.overwriteOutput(output);
    RandomUtils.useTestSeed();
    // Generate the point set under the input path. Unlike the in-memory version,
    // all vectors must be written to a file; the code is shown further below
    SimpleDataSet.writePointsToFile(testpoints);
    // Number of clusters to produce, here 2
    int k = 2;
    // Maximum number of iterations of the K-means algorithm
    int maxIter = 3;
    // Maximum distance threshold of the K-means algorithm
    double distanceThreshold = 0.01;
    // Randomly choose k points as cluster centers
    Path clusters = RandomSeedGenerator.buildRandom(testpoints,
        new Path(output, "clusters-0"), k, measure);
    // Call KMeansDriver.runJob to run the K-means algorithm
    KMeansDriver.runJob(testpoints, clusters, output, measure,
        distanceThreshold, maxIter, 1, true, true);
    // Call ClusterDumper.printClusters to print the clustering result.
    // The parentheses are required: without them the expression
    // "clusters-" + maxIter - 1 does not compile
    ClusterDumper clusterDumper = new ClusterDumper(new Path(output,
        "clusters-" + (maxIter - 1)), new Path(output, "clusteredPoints"));
    clusterDumper.printClusters(null);
}

// SimpleDataSet.writePointsToFile writes the test point set to a file.
// First the test points are wrapped as VectorWritable so that they can be written
public static List<VectorWritable> getPoints(double[][] raw) {
    List<VectorWritable> points = new ArrayList<VectorWritable>();
    for (int i = 0; i < raw.length; i++) {
        double[] fr = raw[i];
        Vector vec = new RandomAccessSparseVector(fr.length);
        vec.assign(fr);
        // The only difference from before: wrap the RandomAccessSparseVector
        // in a VectorWritable before adding it to the list
        points.add(new VectorWritable(vec));
    }
    return points;
}

// Write the VectorWritable point set to a file. This involves some basic Hadoop
// programming elements; see the reference resources for details
public static void writePointsToFile(Path output) throws IOException {
    // Generate the point set with the method above
    List<VectorWritable> pointVectors = getPoints(points);
    // Set up the basic Hadoop configuration
    Configuration conf = new Configuration();
    // Obtain the Hadoop FileSystem object
    FileSystem fs = FileSystem.get(output.toUri(), conf);
    // Create a SequenceFile.Writer, which writes the vectors to the file
    SequenceFile.Writer writer = new SequenceFile.Writer(fs, conf, output,
        Text.class, VectorWritable.class);
    // Write the vectors to the file
    try {
        for (VectorWritable vw : pointVectors) {
            writer.append(new Text(), vw);
        }
    } finally {
        writer.close();
    }
}

Execution results

KMeans Clustering In Memory Result

Cluster id: 0

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],\"values\":[1.8,1.8,0.0],\"state\":[1,1,0],

\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,\"highWaterMark\":1,

\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},\"size\":2,\"lengthSquared\":-1.0}"}

Points: 5

Cluster id: 1

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],

\"values\":[7.142857142857143,7.285714285714286,0.0],\"state\":[1,1,0],

\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,\"highWaterMark\":1,

\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},\"size\":2,\"lengthSquared\":-1.0}"}

Points: 7

KMeans Clustering Using Map/Reduce Result

Weight: Point:

1.0: [1.000, 1.000]

1.0: [2.000, 1.000]

1.0: [1.000, 2.000]

1.0: [2.000, 2.000]

1.0: [3.000, 3.000]

Weight: Point:

1.0: [8.000, 8.000]

1.0: [9.000, 8.000]

1.0: [8.000, 9.000]

1.0: [9.000, 9.000]

1.0: [5.000, 5.000]

1.0: [5.000, 6.000]

1.0: [6.000, 6.000]


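1) Path Input: the path to all the data points to be clustered. Required.

2) Path clusters: the path where the center of each cluster is stored. Required.

3) Path output: the path where the clustering result is stored. Required. If the number of clusters is specified, the files under this path must be empty.

4) DistanceMeasure measure: the method used to compute the distance between data points. Optional; the default is SquaredEuclidean.

Available values: ChebyshevDistanceMeasure (Chebyshev distance)

CosineDistanceMeasure (cosine distance)

EuclideanDistanceMeasure (Euclidean distance)

MahalanobisDistanceMeasure (Mahalanobis distance)

ManhattanDistanceMeasure (Manhattan distance)

MinkowskiDistanceMeasure (Minkowski distance)

SquaredEuclideanDistanceMeasure (Euclidean distance without taking the square root)

TanimotoDistanceMeasure (Tanimoto coefficient distance)

There are also some weight-based distance measures:

WeightedDistanceMeasure

WeightedEuclideanDistanceMeasure and WeightedManhattanDistanceMeasure

5) Double convergenceDelta: the convergence threshold. If the distance between a new cluster center and the previous one exceeds convergenceDelta, iteration continues; otherwise it stops. Optional; the default is 0.5.

6) int maxIterations: the maximum number of iterations. Iteration continues while the iteration count is below maxIterations and stops otherwise. Iteration stops as soon as either this condition or the convergenceDelta condition in 5) is met. Required.

7) boolean runClustering: if true, each data point is assigned to a cluster after the cluster centers have been computed; otherwise the run ends once the centers are computed. Optional; the default is true.

8) clusteringOption: whether to compute on a single machine or with Map/Reduce. Optional; the default is mapreduce.

9) int numClustersOption: the number of clusters. Optional.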
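To make the difference between a few of the distance measures in 4) concrete, here is a minimal sketch in plain Java. It does not use Mahout: the class `DistanceSketch` and its methods are hypothetical helpers written only for illustration. Note that the squared Euclidean distance ranks the cluster centers in the same order as the Euclidean distance while skipping the square root.

```java
// Illustrative only: these helpers are NOT Mahout's DistanceMeasure classes.
final class DistanceSketch {

    // Sum of squared coordinate differences (no square root).
    static double squaredEuclidean(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return sum;
    }

    // Straight-line distance.
    static double euclidean(double[] a, double[] b) {
        return Math.sqrt(squaredEuclidean(a, b));
    }

    // Sum of absolute coordinate differences.
    static double manhattan(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            sum += Math.abs(a[i] - b[i]);
        }
        return sum;
    }

    // Largest absolute coordinate difference.
    static double chebyshev(double[] a, double[] b) {
        double max = 0.0;
        for (int i = 0; i < a.length; i++) {
            max = Math.max(max, Math.abs(a[i] - b[i]));
        }
        return max;
    }

    public static void main(String[] args) {
        double[] p = {1.0, 1.0};
        double[] q = {4.0, 5.0};
        System.out.println("squared Euclidean: " + squaredEuclidean(p, q)); // 25.0
        System.out.println("Euclidean: " + euclidean(p, q));                // 5.0
        System.out.println("Manhattan: " + manhattan(p, q));                // 7.0
        System.out.println("Chebyshev: " + chebyshev(p, q));                // 4.0
    }
}
```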

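Having covered K-means clustering, we can see that its main strengths are that the principle is simple, it is relatively easy to implement, and it runs efficiently and scales well to large data sets. Its weaknesses are just as clear. First, the user must fix the number of clusters before clustering starts, which is rarely known in advance for most problems, so the best value of K usually has to be found through repeated trials. Second, because the algorithm starts from randomly chosen initial centers, it tolerates noise and outliers poorly. Noise means erroneous data among the objects being clustered, and an outlier is a point that lies far from the rest of the data and has low similarity to it. If an outlier or a noise point is picked as an initial cluster center, the whole clustering process that follows suffers badly. Is there a way to quickly find out how many clusters to use and where their centers are, so that K-means can run far more efficiently? The next method does exactly that: Canopy clustering.

Canopy clustering algorithm

The basic idea of Canopy clustering is to first apply a cheap, approximate distance computation to split the data efficiently into several groups, each called a Canopy. Canopies may overlap. A stricter distance computation is then used to compare only the points that share a Canopy and to assign them to the most suitable cluster. Canopy clustering is often used as a preprocessing step for K-means, to find a suitable value of k and the cluster centers.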

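Creating the canopies works as follows. Start with a point set S and two preset distance thresholds T1 and T2, with T1 > T2. Pick a point and compute its distance to every other point in S, using a cheap distance computation. Put the points within T1 into one Canopy, and remove from S the points within T2 of the chosen point, which guarantees that a point within T2 of a center can never become the center of another Canopy. Repeat the whole process until S is empty.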
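The procedure above can be sketched in plain Java without Mahout. This is a minimal illustration, not Mahout's CanopyClusterer: the class `CanopySketch` is hypothetical, it always picks the first remaining point as the next center, it uses strict "less than" comparisons against T1 and T2, and it assumes the twelve sample points that appear in the results in this article, in the order shown.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical helper, not part of Mahout.
final class CanopySketch {

    static double distance(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }

    // Returns the canopies; each canopy is the list of points it contains.
    static List<List<double[]>> createCanopies(List<double[]> points, double t1, double t2) {
        List<double[]> remaining = new ArrayList<>(points); // the set S
        List<List<double[]>> canopies = new ArrayList<>();
        while (!remaining.isEmpty()) {
            // Assumption: take the first remaining point as the canopy center.
            double[] center = remaining.remove(0);
            List<double[]> canopy = new ArrayList<>();
            canopy.add(center);
            Iterator<double[]> it = remaining.iterator();
            while (it.hasNext()) {
                double[] p = it.next();
                double d = distance(center, p);
                if (d < t1) {
                    canopy.add(p);   // within T1: member of this canopy
                }
                if (d < t2) {
                    it.remove();     // within T2: can no longer become a center
                }
            }
            canopies.add(canopy);
        }
        return canopies;
    }

    static List<double[]> samplePoints() {
        double[][] raw = {
            {1, 1}, {2, 1}, {1, 2}, {2, 2}, {3, 3}, {8, 8},
            {9, 8}, {8, 9}, {9, 9}, {5, 5}, {5, 6}, {6, 6}
        };
        List<double[]> points = new ArrayList<>();
        for (double[] p : raw) {
            points.add(p);
        }
        return points;
    }

    public static void main(String[] args) {
        List<List<double[]>> canopies = createCanopies(samplePoints(), 4.0, 3.0);
        for (int i = 0; i < canopies.size(); i++) {
            System.out.println("Canopy " + i + " points: " + canopies.get(i).size());
        }
    }
}
```

A point whose distance to the center lies between T2 and T1 joins the canopy but stays in S, which is how canopies come to overlap. With T1 = 4.0 and T2 = 3.0 on these points, the sketch produces three canopies with 5, 6, and 2 points.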

As with K-means, Mahout provides two implementations of Canopy clustering. The code below shows both.

Listing 4. Canopy clustering example

// In-memory implementation of Canopy clustering

public static void canopyClusterInMemory () {

// Set the distance thresholds T1 and T2

double T1 = 4.0;

double T2 = 3.0;

// Call CanopyClusterer.createCanopies to create the canopies. The arguments are:

// 1. The point set to cluster

// 2. The distance measure

// 3. The distance thresholds T1 and T2

List<Canopy> canopies = CanopyClusterer.createCanopies(

SimpleDataSet.getPointVectors(SimpleDataSet.points),

new EuclideanDistanceMeasure(), T1, T2);

// Print the canopies. The problem is simple, so no further exact clustering step is run here.

// When needed, the Canopy result can be used as the input to K-means for more accurate and more efficient clustering.

for(Canopy canopy : canopies) {

System.out.println("Cluster id: " + canopy.getId() +

" center: " + canopy.getCenter().asFormatString());

System.out.println(" Points: " + canopy.getNumPoints());

}

}

// Hadoop implementation of Canopy clustering

public static void canopyClusterUsingMapReduce() throws Exception{

// Set the distance thresholds T1 and T2

double T1 = 4.0;

double T2 = 3.0;

// Set the distance measure

DistanceMeasure measure = new EuclideanDistanceMeasure();

// Set the input and output paths

Path testpoints = new Path("testpoints");

Path output = new Path("output");

// Clear any data under the input and output paths

HadoopUtil.overwriteOutput(testpoints);

HadoopUtil.overwriteOutput(output);

// Write the test point set to the input directory

SimpleDataSet.writePointsToFile(testpoints);

// Call CanopyDriver.buildClusters to run Canopy clustering. The arguments are:

// 1. The input path and the output path

// 2. The distance measure

// 3. The distance thresholds T1 and T2

new CanopyDriver().buildClusters(testpoints, output, measure, T1, T2, true);

// Print the Canopy clustering result

List<List<Cluster>> clustersM = DisplayClustering.loadClusters(output);

List<Cluster> clusters = clustersM.get(clustersM.size()-1);

if(clusters != null){

for(Cluster canopy : clusters) {

System.out.println("Cluster id: " + canopy.getId() +

" center: " + canopy.getCenter().asFormatString());

System.out.println(" Points: " + canopy.getNumPoints());

}

}

}

Execution result

Canopy Clustering In Memory Result

Cluster id: 0

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],\"values\":[1.8,1.8,0.0],

\"state\":[1,1,0],\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,

\"highWaterMark\":1,\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},

\"size\":2,\"lengthSquared\":-1.0}"}

Points: 5

Cluster id: 1

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],\"values\":[7.5,7.666666666666667,0.0],

\"state\":[1,1,0],\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,

\"highWaterMark\":1,\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},\"size\":2,

\"lengthSquared\":-1.0}"}

Points: 6

Cluster id: 2

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],\"values\":[5.0,5.5,0.0],

\"state\":[1,1,0],\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,

\"highWaterMark\":1,\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},\"size\":2,

\"lengthSquared\":-1.0}"}

Points: 2

Canopy Clustering Using Map/Reduce Result

Cluster id: 0

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],\"values\":[1.8,1.8,0.0],

\"state\":[1,1,0],\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,

\"highWaterMark\":1,\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},

\"size\":2,\"lengthSquared\":-1.0}"}

Points: 5

Cluster id: 1

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],\"values\":[7.5,7.666666666666667,0.0],

\"state\":[1,1,0],\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,

\"highWaterMark\":1,\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},\"size\":2,

\"lengthSquared\":-1.0}"}

Points: 6

Cluster id: 2

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],

\"values\":[5.333333333333333,5.666666666666667,0.0],\"state\":[1,1,0],

\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,\"highWaterMark\":1,

\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},\"size\":2,\"lengthSquared\":-1.0}"}

Points: 3

|

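Fuzzy K-means clustering algorithm

Fuzzy K-means is an extension of K-means. The principle is the same, except that its result allows an object to belong to more than one cluster; in other words, it is one of the overlapping clustering algorithms introduced earlier. To understand how fuzzy K-means differs from K-means, we first need to spend some time on one concept: the fuzziness factor.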

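Like K-means, fuzzy K-means loops over the set of vectors to be clustered. Instead of assigning each vector to its nearest cluster, however, it computes the association between the vector and every cluster. Suppose there is a vector v and k clusters, and the distances from v to the k cluster centers are d1, d2, ..., dk. The association u1 of v with the first cluster is then:

$$u_1 = \frac{1}{\left(\frac{d_1}{d_1}\right)^{\frac{2}{m-1}} + \left(\frac{d_1}{d_2}\right)^{\frac{2}{m-1}} + \cdots + \left(\frac{d_1}{d_k}\right)^{\frac{2}{m-1}}}$$

To compute the association of v with any other cluster, replace d1 with the corresponding distance.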

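From this formula we can see how m shapes the result. As m approaches 1, the exponent 2/(m-1) grows without bound, so almost all of the association goes to the nearest cluster and the result approaches ordinary K-means. At m = 2 the exponent is 2, and the associations are inversely proportional to the squared distances. The larger m is, the fuzzier the clustering becomes. m must be greater than 1 (values between 1 and 2 are suggested here), and it is the fuzziness factor mentioned above.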
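A small sketch makes the effect of m easier to see. It assumes the standard fuzzy K-means membership formula, u_i = 1 / Σ_j (d_i / d_j)^(2/(m-1)), and that every distance is strictly positive. The class `FuzzyMembershipSketch` is a hypothetical helper in plain Java, not Mahout's FuzzyKMeansClusterer.

```java
// Hypothetical helper, not part of Mahout.
final class FuzzyMembershipSketch {

    // distances[i] is the distance from the vector to the center of cluster i.
    // Assumes m > 1 and all distances > 0.
    static double[] memberships(double[] distances, double m) {
        double exponent = 2.0 / (m - 1.0);
        double[] u = new double[distances.length];
        for (int i = 0; i < distances.length; i++) {
            double sum = 0.0;
            for (int j = 0; j < distances.length; j++) {
                sum += Math.pow(distances[i] / distances[j], exponent);
            }
            u[i] = 1.0 / sum;
        }
        return u;
    }

    public static void main(String[] args) {
        double[] distances = {1.0, 3.0};
        for (double m : new double[] {1.1, 2.0, 10.0}) {
            double[] u = memberships(distances, m);
            System.out.println("m = " + m + ": u1 = " + u[0] + ", u2 = " + u[1]);
        }
    }
}
```

For distances 1 and 3, m = 2 gives associations of about 0.9 and 0.1. With m = 1.1 the nearer cluster takes almost everything, and with m = 10 the two associations move much closer together.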

With the theory covered, let's see how to run fuzzy K-means clustering with Mahout. As with the previous methods, Mahout provides an in-memory implementation and a Hadoop Map/Reduce implementation, FuzzyKMeansClusterer and FuzzyKMeansDriver. Listing 5 gives an example of each.

Listing 5. Fuzzy K-means clustering example

public static void fuzzyKMeansClusterInMemory() {

// Number of clusters

int k = 2;

// Maximum number of iterations for the K-means clustering algorithm

int maxIter = 3;

// Maximum distance threshold for the K-means clustering algorithm

double distanceThreshold = 0.01;

// Fuzziness factor for the fuzzy K-means clustering algorithm

float fuzzificationFactor = 10;

// Distance measure; Euclidean distance is used here

DistanceMeasure measure = new EuclideanDistanceMeasure();

// Build the vector set from the 2-D point set in Listing 1

List<Vector> pointVectors = SimpleDataSet.getPointVectors(SimpleDataSet.points);

// Randomly choose k vectors from the point set as the cluster centers

List<Vector> randomPoints = RandomSeedGenerator.chooseRandomPoints(pointVectors, k);

// Build the initial clusters. Unlike K-means, SoftCluster is used here, meaning that clusters may overlap

List<SoftCluster> clusters = new ArrayList<SoftCluster>();

int clusterId = 0;

for (Vector v : randomPoints) {

clusters.add(new SoftCluster(v, clusterId++, measure));

}

// Call FuzzyKMeansClusterer.clusterPoints to run fuzzy K-means clustering

List<List<SoftCluster>> finalClusters =

FuzzyKMeansClusterer.clusterPoints(pointVectors,

clusters, measure, distanceThreshold, maxIter, fuzzificationFactor);

// Print the clustering result

for(SoftCluster cluster : finalClusters.get(finalClusters.size() - 1)) {

System.out.println("Fuzzy Cluster id: " + cluster.getId() +

" center: " + cluster.getCenter().asFormatString());

}

}

public static void fuzzyKMeansClusterUsingMapReduce() throws Exception {

// Fuzziness factor for the fuzzy K-means clustering algorithm

float fuzzificationFactor = 2.0f;

// Number of clusters; 2 is used here

int k = 2;

// Maximum number of iterations

int maxIter = 3;

// Maximum distance threshold

double distanceThreshold = 0.01;

// Distance measure; Euclidean distance is used here

DistanceMeasure measure = new EuclideanDistanceMeasure();

// Set the input and output paths

Path testpoints = new Path("testpoints");

Path output = new Path("output");

// Clear any data under the input and output paths

HadoopUtil.overwriteOutput(testpoints);

HadoopUtil.overwriteOutput(output);

// Write the test point set to the input directory

SimpleDataSet.writePointsToFile(testpoints);

// Randomly choose k points as the cluster centers

Path clusters = RandomSeedGenerator.buildRandom(testpoints,

new Path(output, "clusters-0"), k, measure);

FuzzyKMeansDriver.runJob(testpoints, clusters, output, measure, 0.5, maxIter, 1,

fuzzificationFactor, true, true, distanceThreshold, true);

// Print the fuzzy K-means clustering result

ClusterDumper clusterDumper = new ClusterDumper(new Path(output, "clusters-" +

maxIter ),new Path(output, "clusteredPoints"));

clusterDumper.printClusters(null);

}

Execution result

Fuzzy KMeans Clustering In Memory Result

Fuzzy Cluster id: 0

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],

\"values\":[1.9750483367699223,1.993870669568863,0.0],\"state\":[1,1,0],

\"freeEntries\":1,\"distinct\":2,\"lowWaterMark\":0,\"highWaterMark\":1,

\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},\"size\":2,\"lengthSquared\":-1.0}"}

Fuzzy Cluster id: 1

center:{"class":"org.apache.mahout.math.RandomAccessSparseVector",

"vector":"{\"values\":{\"table\":[0,1,0],

\"values\":[7.924827516566109,7.982356511917616,0.0],\"state\":[1,1,0],

\"freeEntries\":1, \"distinct\":2,\"lowWaterMark\":0,\"highWaterMark\":1,

\"minLoadFactor\":0.2,\"maxLoadFactor\":0.5},\"size\":2,\"lengthSquared\":-1.0}"}

Fuzzy KMeans Clustering Using Map Reduce Result

Weight: Point:

0.9999249428064162: [8.000, 8.000]

0.9855340718746096: [9.000, 8.000]

0.9869963781734195: [8.000, 9.000]

0.9765978701133124: [9.000, 9.000]

0.6280999013864511: [5.000, 6.000]

0.7826097471578298: [6.000, 6.000]

Weight: Point:

0.9672607354172386: [1.000, 1.000]

0.9794914088151625: [2.000, 1.000]

0.9803932521191389: [1.000, 2.000]

0.9977806183197744: [2.000, 2.000]

0.9793701109946826: [3.000, 3.000]

0.5422929338028506: [5.000, 5.000]

|

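Dirichlet clustering algorithm

The three clustering algorithms introduced so far are all partition-based. We now briefly introduce a clustering algorithm based on a probability distribution model: Dirichlet Processes Clustering.

First, a short explanation of how clustering based on a probability distribution model (model-based clustering for short) works. We start by defining a distribution model. Simple examples are a circle or a triangle; more complex ones are the normal distribution or the Poisson distribution. The data is then classified according to the model: objects are added to a model, and the model grows or shrinks. After each round, the parameters of each model are recomputed and the probability that each object belongs to the model is estimated. The core of model-based clustering is therefore the definition of the model: for a given clustering problem, the quality of the model directly determines the quality of the result. Here is a simple example. Suppose the task is to divide some 2-D points into three groups, shown in different colors in the figure. Figure A is the result of clustering with a circular model, and Figure B is the result with a triangular model. The circular model is clearly the right choice, while the triangular model misses some points and misclassifies others, so it is the wrong choice.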

Figure 3. Clustering results with different models
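The step "estimate the probability that an object belongs to a model" can be illustrated with a small plain-Java sketch. It is not Mahout's DirichletClusterer, which also resamples the model parameters on every iteration. The class `ModelProbabilitySketch` is hypothetical, and it assumes fixed spherical normal models, each described by a mean, a standard deviation, and a mixture weight.

```java
// Hypothetical helper, not part of Mahout.
final class ModelProbabilitySketch {

    // Density of a spherical normal distribution at point x.
    static double density(double[] x, double[] mean, double sd) {
        double squaredDistance = 0.0;
        for (int i = 0; i < x.length; i++) {
            double diff = x[i] - mean[i];
            squaredDistance += diff * diff;
        }
        double variance = sd * sd;
        double normalizer = Math.pow(2.0 * Math.PI * variance, x.length / 2.0);
        return Math.exp(-squaredDistance / (2.0 * variance)) / normalizer;
    }

    // Probability that x belongs to each model: weight * density, normalized to sum to 1.
    static double[] membership(double[] x, double[][] means, double[] sds, double[] weights) {
        double[] p = new double[means.length];
        double total = 0.0;
        for (int k = 0; k < means.length; k++) {
            p[k] = weights[k] * density(x, means[k], sds[k]);
            total += p[k];
        }
        for (int k = 0; k < p.length; k++) {
            p[k] /= total;
        }
        return p;
    }

    public static void main(String[] args) {
        double[][] means = {{1.8, 1.8}, {8.0, 8.0}};
        double[] sds = {1.0, 1.0};
        double[] weights = {0.5, 0.5};
        double[] p = membership(new double[] {2.0, 2.0}, means, sds, weights);
        System.out.println("P(model 0) = " + p[0] + ", P(model 1) = " + p[1]);
    }
}
```

A point near the first mean gets a probability close to 1 for the first model, which is the quantity a model-based algorithm would re-estimate after each round.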

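Mahout's Dirichlet clustering works as follows. We start with a set of objects to cluster and a distribution model; in Mahout, ModelDistribution is used to generate the models. Initially we have an empty model. Objects are then tentatively added to the models, and the probability that each object belongs to each model is computed step by step. The listing below shows the in-memory implementation of Dirichlet clustering.

Listing 6. Dirichlet clustering example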

public static void DirichletProcessesClusterInMemory() {

// Alpha parameter of the Dirichlet algorithm. It is a transition parameter that lets objects move smoothly between models

double alphaValue = 1.0;

// Number of models to cluster into

int numModels = 3;

// The thin and burn interval parameters, used to reduce memory usage during clustering

int thinIntervals = 2;

int burnIntervals = 2;

// Maximum number of iterations

int maxIter = 3;

List<VectorWritable> pointVectors =

SimpleDataSet.getPoints(SimpleDataSet.points);

// Create the initial empty distribution model; NormalModelDistribution is used here

ModelDistribution<VectorWritable> model =

new NormalModelDistribution(new VectorWritable(new DenseVector(2)));

// Run the clustering

DirichletClusterer dc = new DirichletClusterer(pointVectors, model, alphaValue,

numModels, thinIntervals, burnIntervals);

List<Cluster[]> result = dc.cluster(maxIter);

// Print the clustering result

for(Cluster cluster : result.get(result.size() -1)){

System.out.println("Cluster id: " + cluster.getId() + " center: " +

cluster.getCenter().asFormatString());

System.out.println(" Points: " + cluster.getNumPoints());

}

}

Execution result

Dirichlet Processes Clustering In Memory Result

Cluster id: 0

center:{"class":"org.apache.mahout.math.DenseVector",

"vector":"{\"values\":[5.2727272727272725,5.2727272727272725],

\"size\":2,\"lengthSquared\":-1.0}"}

Points: 11

Cluster id: 1

center:{"class":"org.apache.mahout.math.DenseVector",

"vector":"{\"values\":[1.0,2.0],\"size\":2,\"lengthSquared\":-1.0}"}

Points: 1

Cluster id: 2

center:{"class":"org.apache.mahout.math.DenseVector",

"vector":"{\"values\":[9.0,8.0],\"size\":2,\"lengthSquared\":-1.0}"}

Points: 0

|

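Mahout provides several implementations of probability distribution models, all of which inherit from ModelDistribution, as shown in Figure 4. Users can choose a model that suits the characteristics of their own data set; see the official Mahout documentation for details.

Figure 4. Hierarchy of probability distribution models in Mahout

Summary of Mahout clustering algorithms

The sections above described the four clustering algorithms provided by Mahout in detail. Here is a brief summary comparing them. Besides these four, Mahout also provides some more complex clustering algorithms, which are not covered one by one here; see the detailed descriptions of the clustering algorithms on the Mahout Wiki.

Table 1. Summary of Mahout clustering algorithms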

| Algorithm | In-memory implementation | Map/Reduce implementation | Number of clusters fixed | Clusters may overlap |
| --- | --- | --- | --- | --- |
| K-means | KMeansClusterer | KMeansDriver | Y | N |
| Canopy | CanopyClusterer | CanopyDriver | N | N |
| Fuzzy K-means | FuzzyKMeansClusterer | FuzzyKMeansDriver | Y | Y |
| Dirichlet | DirichletClusterer | DirichletDriver | N | Y |

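Summary

Clustering algorithms are widely used in intelligent information processing systems. This article first outlined the concept of clustering and the ideas behind clustering algorithms, so that readers get an overall picture of this important technique. Then, from the point of view of building real applications, it went into the clustering framework in the open source Apache Mahout project, including its mathematical model, the individual clustering algorithms, and their implementations on different infrastructures. From the code examples, readers can see how to vectorize data for their own problem and how to choose among the different clustering algorithms.

The next article in this series continues with another family of algorithms behind recommendation engines: classification. Like clustering, classification is a classic data mining problem. It is mainly used to extract a model that describes important classes of data, which can then be used for prediction, and recommendation is one form of prediction. Clustering and classification also tend to complement each other, and both support efficient recommendation over massive data sets. The next article will therefore describe the various classification algorithms in detail, including their principles, strengths and weaknesses, and typical use cases, and will give efficient implementations based on Apache Mahout.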

����

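Traditional clustering has been fairly successful on low-dimensional data. Because real-world data is complex, however, existing algorithms often fail on many problems, especially with high-dimensional data and large data sets. Traditional clustering methods run into two main problems on high-dimensional data sets. (1) A high-dimensional data set contains many irrelevant attributes, so the chance that clusters exist across all dimensions is almost zero. (2) Data in a high-dimensional space is much sparser than in a low-dimensional space, and it is common for the distances between data points to be almost equal. Since traditional clustering methods cluster by distance, clusters cannot be built from distance in a high-dimensional space.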
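The claim that distances become almost equal in high dimensions can be checked with a small experiment. The sketch below is an illustration under stated assumptions: points are drawn uniformly from the unit cube with a fixed seed, and "relative contrast" is defined here as (farthest - nearest) / nearest for the distances from one query point to n random points. The class `DistanceConcentrationSketch` is a hypothetical name.

```java
import java.util.Random;

// Hypothetical helper that illustrates distance concentration.
final class DistanceConcentrationSketch {

    static double[] randomPoint(Random rng, int dims) {
        double[] p = new double[dims];
        for (int i = 0; i < dims; i++) {
            p[i] = rng.nextDouble();
        }
        return p;
    }

    static double euclidean(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }

    // (farthest - nearest) / nearest over n random points around one random query point.
    static double relativeContrast(int dims, int n, long seed) {
        Random rng = new Random(seed);
        double[] query = randomPoint(rng, dims);
        double min = Double.POSITIVE_INFINITY;
        double max = 0.0;
        for (int i = 0; i < n; i++) {
            double d = euclidean(query, randomPoint(rng, dims));
            min = Math.min(min, d);
            max = Math.max(max, d);
        }
        return (max - min) / min;
    }

    public static void main(String[] args) {
        for (int dims : new int[] {2, 10, 100, 1000}) {
            System.out.println(dims + " dimensions: relative contrast = "
                    + relativeContrast(dims, 1000, 42L));
        }
    }
}
```

In two dimensions the nearest point is far closer than the farthest one, so the contrast is large. As the number of dimensions grows, the contrast shrinks toward zero, which is why purely distance-based clustering loses its footing.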

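High-dimensional cluster analysis has become an important research direction within cluster analysis, and clustering high-dimensional data is also one of the hard problems of clustering. As technology advances, collecting data keeps getting easier, so databases keep growing in size and complexity. Examples include trade transaction data of all kinds, Web documents, and gene expression data, whose dimensionality (number of attributes) can reach hundreds or thousands, or even more. Because of the "curse of dimensionality", however, many clustering methods that perform well in low-dimensional spaces often fail to produce good clusters in high-dimensional spaces. High-dimensional cluster analysis is a very active area of cluster analysis and also a challenging one. Today it is widely applied in market analysis, information security, finance, entertainment, counter-terrorism, and other fields.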

http://www.cnblogs.com/shipengzhi/articles/2489389.html

=========

Minhash based clustering

https://issues.apache.org/jira/browse/MAHOUT-344

========

How to improve clustering?

http://comments.gmane.org/gmane.comp.apache.mahout.user/16296

========

Running Mahout's k-means example

|

|

| | | |